Sam Altman, the CEO of OpenAI, is wary of making predictions about the future of artificial intelligence. But if he’s prodded long enough, he’ll at least engage with the question of when a “superintelligence” smarter than human beings might emerge.

“I would certainly say by the end of this decade, so, by 2030, if we don’t have models that are extraordinarily capable and do things that we ourselves cannot do, I’d be very surprised,” he said in an interview with the Axel Springer Global Reporters Network, of which POLITICO Magazine is a part.

Altman made his comments in Berlin, where he received this year’s Axel Springer Award.

The tech billionaire also weighed in on how AI is likely to reshape the economy, whether AI would treat humans like ants and why he and other Silicon Valley heavyweights have warmed to President Donald Trump.

This conversation has been edited for length and clarity.

Artificial intelligence is developing rapidly. When exactly do you think there will be a superintelligence that is smarter than humans in all respects?

I think in many ways GPT5 is already smarter than me at least, and I think a lot of other people too. GPT5 is capable of doing incredible things that many people would struggle with or find very impressive. But it’s also not able to do a lot of things that humans could do easily. And I think that will be the course of things for a while, where we will see that AI systems can do some things incredibly well, struggle with some others, and humans use these tools and bring their sort of human insight, creativity, ingenuity to bear in ways that are really important.

I expect, though, the trajectory of the capability progress of AI to remain extremely steep. We've seen just in the two years or three years since ChatGPT has launched, how much more capable the models have gotten. And I see no sign of that slowing down. I think in another couple of years, it will become very plausible for AI to make, for example, scientific discoveries that humans cannot make on their own. To me, that'll start to feel like something we could properly call superintelligence.

And do you have an exact prediction of the year in which you expect this superintelligence to emerge?

One of the things that I have learned continuously is, although we can say the ramp will be very steep, it’s difficult to be very precise that, you know, it'll happen this month or this year. But I would certainly say by the end of this decade, so, by 2030, if we don't have models that are extraordinarily capable and do things that we ourselves cannot do, I'd be very surprised.

Many experts believe that entire job profiles will disappear, from accountants to bank advisers. In your opinion, what percentage of today's jobs will simply disappear in the foreseeable future?

Well, within 30 years, I would expect a lot of change. But in 30 years, jobs change all the time. Think about the jobs that we did 30 years ago that may not exist at all today, or new jobs that were kind of difficult to imagine 30 years ago that are now commonplace today. I remember reading a statistic once that about every 75 years, half the jobs in society change over. That's even without AI. It may happen. I expect it will happen faster now.

The thing that I find useful is to think about the percentage of tasks, not the percentage of jobs. There will be many jobs where a lot of what it means to do that job changes. AI can do things much better. It can free up people to do more and different things. There will, of course, be totally new jobs. And many existing jobs will entirely go away to be replaced by these new jobs. But I think the more interesting thing is, of everyone's job, what percentage of the tasks you do every day will be done by AI? And I can easily imagine a world where 30, 40 percent of the tasks that happen in the economy today get done by AI in the not very distant future.

You became a father this year. What education would you advise your son to pursue so that his job won't simply be replaced by AI in 30 years?

The meta-skill of learning how to learn, of learning to adapt, learning to be resilient to a lot of change. Learning how to figure out what people want, how to make useful products and services for them, how to interact in the world.

I'm so confident that people will still be the center of the story for each other. I'm also so confident that human desire for new stuff, desire to be useful to other people, desire to express our creativity, I think this is all limitless. In all these previous technological revolutions, people wonder, rightly so, what are we all going to do? In the industrial age, these machines came along. We watched them do the things that we used to do and said, what would be the role for us? And each new generation uses their creativity and new ideas and all of the tools the previous generation built for them to astonish us. And I'm sure my kids will do the same.

You sound very optimistic, but of course there are also AI critics who see mainly the dark side and the dangers. For example, the famous AI researcher Eliezer Yudkowsky, who says that the relationship between superintelligence and humans is roughly the same as the relationship between humans and ants. We don't think about whether we should destroy an anthill. We just do it if it's in our way. So how big is your personal fear that AI will eventually view us as ants and simply destroy us?

I've heard many people describe many different versions of what the relationship between an AI and humanity will be. The one that has always been my favorite is: My co-founder, Ilya Sutskever, once said that he hoped that the way that an AGI would treat humanity or all AGIs would treat humanity is like a loving parent. And given the way you asked that question, it came to mind. I think it's a particularly beautiful framing.

That said, I think when we ask that question at all, we are sort of anthropomorphizing AGI. And what this will be is a tool that is enormously capable. And even if it has no intentionality, by asking it to do something, there could be side effects, consequences we don't understand. And so it is very important that we align it to human values. But we get to align this tool to human values and I don't think it'll treat humans like ants. Let's say that.

Critics accuse you of transforming OpenAI from a non-profit institution that was supposed to research the risks of AI into a commercial enterprise, and of partially disregarding the security risks in the process. Do you agree with this criticism? Does it bother you? Or do you think that sometimes you just have to forge ahead if you want to make progress?

First of all, we still have a non-profit entity, and we always will. I hope that we will have, I believe we will have, the best resourced and hopefully the most impactful non-profit of all time. And this is very important to our mission. Also important to our mission is the governance role of this and ensuring that we stick to our mission and that we prioritize safety and the well-being and maximum benefit of humanity.

We’ve obviously made some mistakes, and as we understand this new technology we’ll make more in the future. But on the whole, I’m extremely proud of our team's track record on figuring out how to make these services safe, broadly beneficial and widely distributed. One of our core beliefs is that if we figure out how to build this tool, align it with human values, and then put it out into people’s hands and have them express all of the things they want to do with this, that will be great for the world and is deeply in accordance with our mission.

In Europe, there is currently a lively debate about the AI Act to regulate artificial intelligence. What is your view on this? Are the rules good? Are there too many rules, or should they simply be scrapped?

Obviously, that is a question for the European people and the European policymakers. I met with a lot of German companies yesterday, and they did all express both concern about overregulation, but also hope that regulation can be sensible and protect the European people and the world. And I am also hopeful for a good balance there.

You also met with the German chancellor. What did you take away from that conversation?

I was very impressed. We had a great discussion about the need to build infrastructure in Germany to offer AI services in Germany by Germany for Germany. We are very excited about our recent announcement yesterday with SAP and Microsoft, to offer a sovereign cloud for the German public sector to use frontier models and maintain sovereignty.

You say you want to build infrastructure in Germany. The problem is that electricity in Germany is about three times as expensive as in the U.S. and five times as expensive as in China. Is Germany even an attractive location for OpenAI?

So certainly the energy costs are a challenge for AI. However, we had also a good discussion about how to address the energy needs of AI. And more than that, I think that use of AI will be one of the best uses of energy, whether it's used in Germany, or you run on servers that are somewhere else because you don't want to build the data centers here, which would be a very valid choice.

The importance of delivering AI in Germany to German businesses and German consumers is very important. Germany is our biggest market in Europe. It's our fifth biggest market in the entire world. Virtually all young German people use ChatGPT. So AI is here and people are getting value from it. And we'll keep doing that and we'll address the energy challenges.

What would be your recommendation for Germany on how to reduce energy prices? Don't you think the nuclear phase-out is completely crazy from your perspective?

Look, again, this is up to the German people and I don't understand all of the sort of trade-offs locally. I personally believe that nuclear energy, advanced fission, the whole set of approaches is one of the most promising approaches to energy and that's something that the world should pursue more.

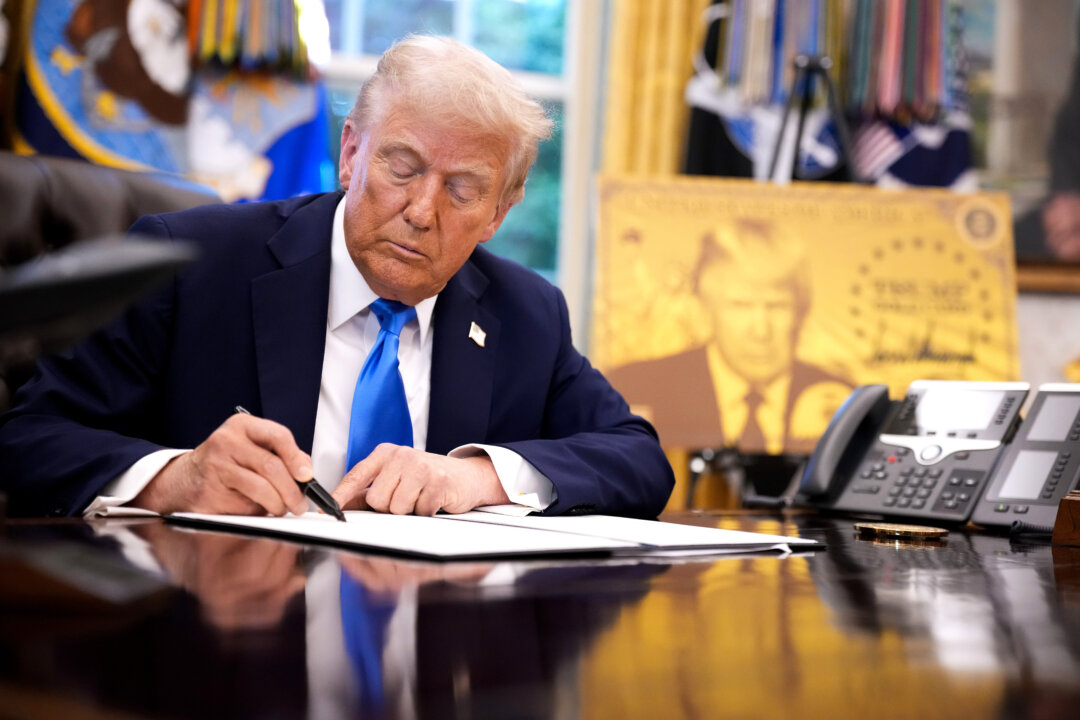

In the tech industry in the U.S., there has long been a liberal-democratic tone. Now it's striking that many tech gurus and tech bosses are also showing their support for Donald Trump. I believe you were at the White House the day after his inauguration, where you announced a major initiative. How do you explain this shift in vibe, this political shift in the tech industry? Why is everyone suddenly so keen to be seen with Trump?

First of all, I think the tech industry should work with whoever the American president is. But in this specific case, I think there have been some welcome policy changes. The ability to build infrastructure in the United States, which has been quite difficult and quite important to companies like ours, President Trump has done an amazing job of supporting. And a more general pro-business climate and pro-tech climate has been also a welcome change.

The U.S. is very polarized. I lived there for a few years myself and witnessed how heated the atmosphere is. What do you think of the idea of simply letting artificial intelligence govern instead of a U.S. president in the future?

I don't think people want that anytime soon. What I expect, though, is that presidents and leaders around the world will use AI more and more to help them with complex decisions. But I think we all still want a human signing off on that at some point.

One last question to finish up. You mentioned earlier how many people in Germany are already using ChatGPT. I took a closer look at that. Many Germans even get relationship advice from ChatGPT. Have you ever asked your own bot for help with relationship issues?

I don't use it as much for that as other people do. I've tried it, but no, that's not one of my big personal use cases. It's clearly something a lot of people use it for.
